by Aryan Mehra

with Farnaz Karimdady Sharifabad, Prasanna Vijayanathan, Chaïna Wade, Vishal Sharma and Mike Schassberger

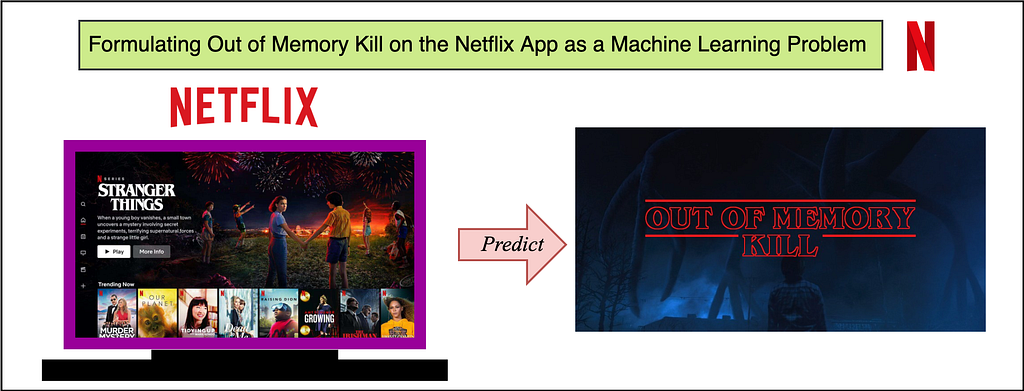

Aim and Purpose — Problem Statement

The purpose of this article is to give insights into analyzing and predicting “out of memory” or OOM kills on the Netflix App. Unlike strong compute devices, TVs and set top boxes usually have tighter memory constraints. More importantly, low resource availability (the “out of memory” scenario) is one of the common reasons for crashes/kills. We at Netflix, as a streaming service running on millions of devices, have a tremendous amount of data about device capabilities/characteristics and runtime data in our big data platform. With large data comes the opportunity to leverage it for predictive and classification-based analysis. Specifically, if we are able to predict or analyze Out of Memory kills, we can take device-specific actions to pre-emptively lower the performance in favor of not crashing, aiming to give the user the ultimate Netflix Experience within the “performance vs pre-emptive action” tradeoff limitations. A major advantage of prediction and pre-emptive action is that we can act before a crash happens, improving the user experience.

We do this by first elaborating on the dataset curation stage, especially focussing on device capabilities and OOM kill related memory readings. We also highlight steps and guidelines for exploratory analysis and prediction to understand Out of Memory kills on a sample set of devices. Since memory management is not something one usually associates with classification problems, this blog focuses on formulating the problem as an ML problem and on the data engineering that goes along with it. We also explore graphical analysis of the labeled dataset and suggest some feature engineering and accuracy measures for future exploration.

Challenges of Dataset Curation and Labeling

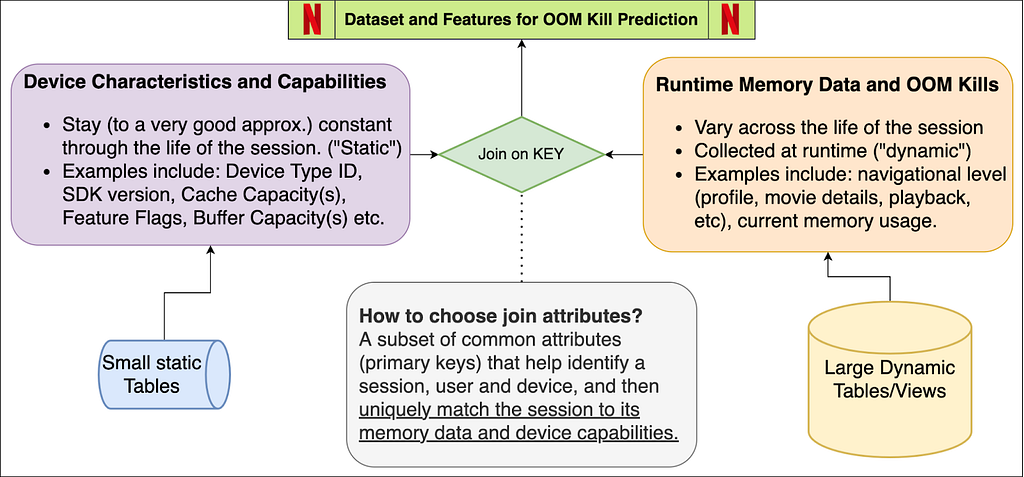

Unlike other Machine Learning tasks, OOM kill prediction is tricky because the dataset is pulled from different sources: device characteristics come from our on-field knowledge, and runtime memory data comes from real-time user data pushed to our servers.

Secondly, and more importantly, the sheer volume of runtime data is enormous. Many devices running Netflix log memory usage at fixed intervals. Since the Netflix App does not get killed very often (fortunately!), most of these entries represent normal/ideal/as-expected runtime states. The dataset will thus be heavily biased/skewed. We will soon see how we actually label which entries are erroneous and which are not.

Dataset Features and Components

The schema figure above describes the two components of the dataset: device capabilities/characteristics and runtime memory data. The two are joined on attributes that uniquely match each memory entry with its device’s capabilities. These attributes may differ between streaming services; for us at Netflix, they are a combination of the device type, app session ID and software development kit version (SDK version). We now explore each of these components individually, highlighting the nuances of the data pipeline and pre-processing.

Device Capabilities

Not all device capabilities reside in one source table, so gathering them requires multiple, if not many, joins. While creating the device capability table, we decided to primary-index it through a composite key of (device type ID, SDK version). Given these two attributes, Netflix can uniquely identify many of the device capabilities. Some nuances in creating this dataset come from the on-field domain knowledge of our engineers. Example features include Device Type ID, SDK Version, Buffer Sizes, Cache Capacities, UI resolution, Chipset Manufacturer and Brand.
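A minimal sketch of the composite-key lookup and join, assuming the capability table fits in memory as a dictionary and each runtime memory reading carries its device type ID and SDK version. All field names (`device_type_id`, `sdk_version`, `buffer_size_mb`, and so on) are hypothetical placeholders rather than Netflix's actual schema; at production scale this would be a distributed join, not a Python loop.

```python
from typing import Dict, List, Tuple

# Hypothetical capability table, primary-indexed by the composite key
# (device_type_id, sdk_version). Field names are illustrative only.
CapabilityKey = Tuple[str, str]

capabilities: Dict[CapabilityKey, dict] = {
    ("tv-a", "5.1"): {"ui_resolution": "1080p", "buffer_size_mb": 64},
    ("tv-a", "5.2"): {"ui_resolution": "1080p", "buffer_size_mb": 96},
    ("stb-b", "5.1"): {"ui_resolution": "720p", "buffer_size_mb": 32},
}


def join_with_capabilities(readings: List[dict]) -> List[dict]:
    """Attach device capabilities to each runtime memory reading.

    Readings whose (device_type_id, sdk_version) key has no capability
    row are dropped, mirroring inner-join semantics.
    """
    joined = []
    for reading in readings:
        key = (reading["device_type_id"], reading["sdk_version"])
        caps = capabilities.get(key)
        if caps is None:
            continue  # no matching capability row: skip this reading
        joined.append({**reading, **caps})
    return joined


readings = [
    {"device_type_id": "tv-a", "sdk_version": "5.2", "session_id": "s1", "memory_mb": 410},
    {"device_type_id": "tv-z", "sdk_version": "9.9", "session_id": "s2", "memory_mb": 120},
]
print(join_with_capabilities(readings))
```

The session ID is carried along on each reading so that, later on, it can be matched against OOM kill records from the same session.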

Major Milestones in Data Engineering for Device Characteristics

Structuring the data in an ML-consumable format: The device capability data needed for the prediction was distributed in over three different schemas across the Big Data Platform. Joining them together and building a single indexable schema that can directly become part of a bigger data pipeline is a big milestone.
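Building the single indexable schema can be pictured as folding several partial capability tables, all keyed on the same (device type ID, SDK version) composite key, into one row per key. The three source tables and their columns below are hypothetical stand-ins for the real schemas, and "first non-NULL value wins" is just one simple merge policy among several.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Key = Tuple[str, str]  # (device_type_id, sdk_version)
KEY_COLUMNS = ("device_type_id", "sdk_version")


def consolidate(sources: Iterable[List[dict]]) -> Dict[Key, dict]:
    """Merge rows from several source tables into one row per composite key.

    NULL (None) values are ignored, and a column already filled by an
    earlier source is not overwritten by a later one.
    """
    table: Dict[Key, dict] = defaultdict(dict)
    for rows in sources:
        for row in rows:
            key = (row["device_type_id"], row["sdk_version"])
            for column, value in row.items():
                if column in KEY_COLUMNS:
                    continue
                if value is not None and column not in table[key]:
                    table[key][column] = value
    return dict(table)


# Hypothetical fragments of the capability data living in three schemas.
hardware = [{"device_type_id": "tv-a", "sdk_version": "5.2", "chipset_manufacturer": "X"}]
ui = [{"device_type_id": "tv-a", "sdk_version": "5.2", "ui_resolution": "1080p"}]
memory = [{"device_type_id": "tv-a", "sdk_version": "5.2", "cache_capacity_mb": 128}]

print(consolidate([hardware, ui, memory]))
# {('tv-a', '5.2'): {'chipset_manufacturer': 'X', 'ui_resolution': '1080p', 'cache_capacity_mb': 128}}
```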

Dealing with ambiguities and missing data: Entries in the Big Data Platform (BDP) are sometimes contaminated with testing entries and NULL values, along with garbage values that have no meaning, or simply contradictory values due to artificial test environments. We deal with all of this by simple majority voting (statistical mode) on the view that is indexed by the device type ID and SDK version from the user query. We thus verify the hypothesis that the true device characteristics are always in the majority in the data lake.
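The majority-voting step can be sketched with `collections.Counter`, assuming rows from the capability view have already been extracted as dictionaries. NULLs are skipped, and the statistical mode per (device type ID, SDK version) key is taken as the true value, so occasional test-environment or contradictory entries are simply outvoted. Column names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

Key = Tuple[str, str]  # (device_type_id, sdk_version)


def majority_vote(rows: Iterable[dict], column: str) -> Dict[Key, object]:
    """Resolve one column per composite key by its statistical mode.

    NULL (None) values do not vote; every other value, including stray
    test-environment values, gets one vote per row.
    """
    votes: Dict[Key, Counter] = defaultdict(Counter)
    for row in rows:
        value = row.get(column)
        if value is None:
            continue
        votes[(row["device_type_id"], row["sdk_version"])][value] += 1
    return {key: counter.most_common(1)[0][0] for key, counter in votes.items()}


rows = [
    {"device_type_id": "tv-a", "sdk_version": "5.2", "buffer_size_mb": 96},
    {"device_type_id": "tv-a", "sdk_version": "5.2", "buffer_size_mb": 96},
    {"device_type_id": "tv-a", "sdk_version": "5.2", "buffer_size_mb": 4096},  # test rig
    {"device_type_id": "tv-a", "sdk_version": "5.2", "buffer_size_mb": 48},    # contradictory
    {"device_type_id": "tv-a", "sdk_version": "5.2", "buffer_size_mb": None},  # NULL
]
print(majority_vote(rows, "buffer_size_mb"))  # {('tv-a', '5.2'): 96}
```

On a tie, `Counter.most_common` returns the value encountered first, so a production version may want an explicit tie-breaking rule.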

Incorporating on-site and field knowledge of devices and engineers: This is probably the single most important achievement of the task, because some of the features mentioned above (and some of the redacted ones) had to be engineered manually. For example, a missing or NULL value might mean the absence of a flag or feature in one attribute, while in another it might require extra work. So a missing value for a feature flag might mean “False”, whereas a missing value in some buffer size feature might mean that we need subqueries to fetch and fill in the missing data.
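The per-column missing-value rules described above can be encoded explicitly. In this sketch, which columns count as feature flags and which need a backfill subquery are hypothetical assumptions; in practice that classification comes from engineers' on-field knowledge. Missing sizes are returned as a to-do list rather than guessed.

```python
from typing import List, Tuple

# Hypothetical column classification, for illustration only.
FLAG_COLUMNS = {"supports_hdr", "has_hw_decoder"}
BACKFILL_COLUMNS = {"buffer_size_mb", "cache_capacity_mb"}


def impute(row: dict) -> Tuple[dict, List[str]]:
    """Apply per-column missing-value rules without mutating the input.

    Missing feature flags are read as False (the feature is absent).
    Missing buffer/cache sizes are left as-is and reported, so that a
    follow-up subquery can fetch and fill them.
    """
    filled = dict(row)
    needs_backfill = []
    for column in FLAG_COLUMNS:
        if filled.get(column) is None:
            filled[column] = False
    for column in BACKFILL_COLUMNS:
        if filled.get(column) is None:
            needs_backfill.append(column)
    return filled, sorted(needs_backfill)


row = {"device_type_id": "tv-a", "sdk_version": "5.2", "supports_hdr": None,
       "has_hw_decoder": True, "buffer_size_mb": None, "cache_capacity_mb": 128}
filled, todo = impute(row)
print(filled["supports_hdr"], todo)  # False ['buffer_size_mb']
```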

Runtime Memory, OOM Kill Data and ground truth labeling

Runtime data is always increasing and constantly evolving. The tables and views we use are refreshed every 24 hours, and joining any two such tables consumes tremendous compute and time resources. To curate this part of the dataset, we suggest the tips given below (written from the point of view of SparkSQL-like distributed query processors):

- Filtering the entries (conditions) before the JOIN, and for this purpose using WHERE and LEFT JOIN clauses carefully. Conditions that eliminate entries after the join operation are much more expensive than filtering before the join. Filtering early also helps prevent the system from running out of memory while executing the query.

- Restricting testing and analysis to one day and one device at a time. It is always good to pick a single high-frequency day like New Year’s or Memorial Day to increase frequency counts and get normalized distributions across various features.

- Striking a balance between driver and executor memory configurations in SparkSQL-like systems. Allocations that are too high may fail and starve system processes. Allocations that are too low may fail during a local collect or when the driver tries to accumulate the results.
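The filtering advice above can be sketched with Python's built-in `sqlite3` module standing in for a SparkSQL-like engine, purely so the example runs anywhere. The tables, columns and dates are hypothetical. Both sides are restricted to one day and one device type inside subqueries, so only the needed rows reach the LEFT JOIN.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE memory_readings (device_type_id TEXT, session_id TEXT,
                              event_date TEXT, ts INTEGER, memory_mb INTEGER);
CREATE TABLE oom_kills (device_type_id TEXT, session_id TEXT,
                        event_date TEXT, ts INTEGER);
INSERT INTO memory_readings VALUES
  ('tv-a', 's1', '2022-01-01', 100, 300),
  ('tv-a', 's1', '2022-01-01', 160, 420),
  ('tv-a', 's2', '2022-01-02', 100, 250),
  ('stb-b', 's3', '2022-01-01', 100, 180);
INSERT INTO oom_kills VALUES ('tv-a', 's1', '2022-01-01', 200);
""")

# Filters run inside the subqueries (before the join); the LEFT JOIN keeps
# readings from sessions that had no kill, with kill_ts = NULL.
query = """
SELECT m.session_id, m.ts, m.memory_mb, k.ts AS kill_ts
FROM (SELECT * FROM memory_readings
      WHERE event_date = :day AND device_type_id = :device) AS m
LEFT JOIN (SELECT * FROM oom_kills
           WHERE event_date = :day AND device_type_id = :device) AS k
  ON m.session_id = k.session_id
ORDER BY m.session_id, m.ts
"""
rows = conn.execute(query, {"day": "2022-01-01", "device": "tv-a"}).fetchall()
print(rows)  # [('s1', 100, 300, 200), ('s1', 160, 420, 200)]
```

Placing a filter on the right-hand table in the outer WHERE clause instead (for example `WHERE k.event_date = :day`) would discard readings from sessions without a kill, silently turning the LEFT JOIN into an inner join; this is one reason the clauses need care. Distributed optimizers often push such predicates down automatically, but writing them explicitly keeps the intent clear. For the memory balance in the last tip, Spark exposes `spark.driver.memory`, `spark.executor.memory` and `spark.driver.maxResultSize`; suitable values depend on the cluster and data volume.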

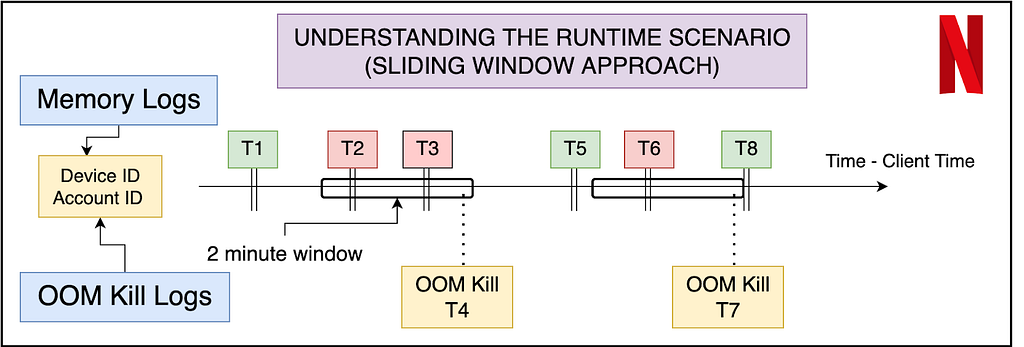

Labeling the data — Ground Truth

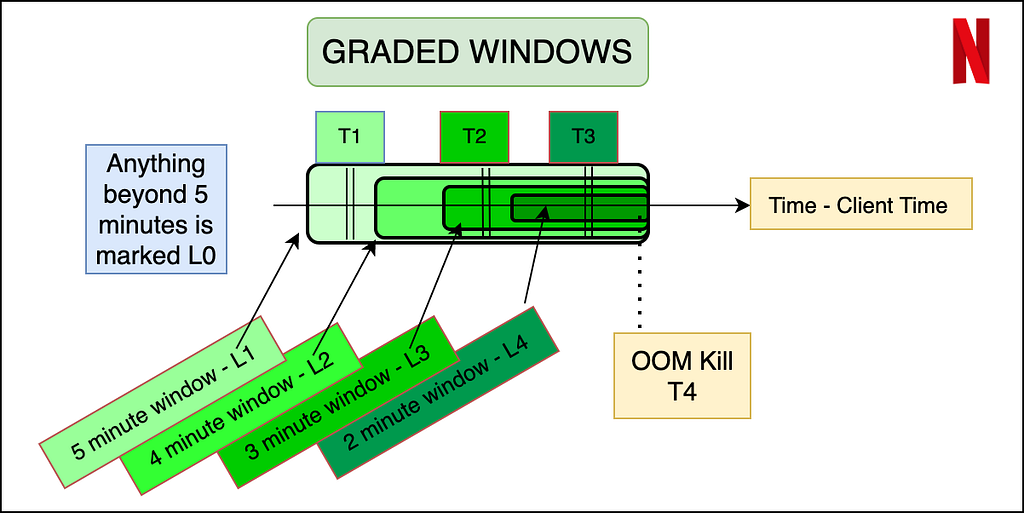

An important aspect of the dataset is understanding which features will be available to us at inference time. Memory data (which contains the navigational level and memory reading) can be labeled using the OOM kill data, but the latter cannot be reflected in the input features. The best way to do this is a sliding window approach: we label the memory readings of the sessions within a fixed window before the OOM kill as erroneous, and the rest of the entries as non-erroneous. To make the labeling more granular and bring more variation than a binary classification model allows, we propose a graded window approach, as illustrated in the figure above. It assigns higher levels to memory readings closer to the OOM kill, making this a multi-class classification problem. Level 4 is closest to the OOM kill (within 2 minutes), whereas Level 0 is more than 5 minutes before any subsequent OOM kill. Note that the device and session of the OOM kill instance and of the memory reading must match for the labeling to be sound. The confusion matrix and the model’s results can later be reduced to binary if needed.
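A minimal sketch of the graded window labeling, assuming readings and kills carry a device ID, a session ID and timestamps in seconds. Level 4 (within 2 minutes of the kill) and Level 0 (more than 5 minutes before any kill) follow the text; the cut-offs for Levels 1 to 3 are hypothetical placeholders, and the helper names are illustrative.

```python
from bisect import bisect_left
from typing import Dict, List, Tuple

# (max seconds before the kill, level). Levels 4 and 0 follow the text;
# the 180/240/300 s cut-offs for Levels 3, 2, 1 are hypothetical.
LEVEL_BOUNDS = [(120, 4), (180, 3), (240, 2), (300, 1)]

SessionKey = Tuple[str, str]  # (device_id, session_id)


def graded_label(reading_ts: float, kill_times: List[float]) -> int:
    """Label one reading by its distance to the next OOM kill.

    `kill_times` must be sorted and belong to the same device and session
    as the reading. Returns 0 when no kill follows within 5 minutes.
    """
    i = bisect_left(kill_times, reading_ts)  # first kill at or after the reading
    if i == len(kill_times):
        return 0
    gap = kill_times[i] - reading_ts
    for bound, level in LEVEL_BOUNDS:
        if gap <= bound:
            return level
    return 0


def label_readings(readings: List[dict], kills: Dict[SessionKey, List[float]]) -> List[int]:
    """Label every reading, matching kills on both device and session."""
    labels = []
    for r in readings:
        session_kills = sorted(kills.get((r["device_id"], r["session_id"]), []))
        labels.append(graded_label(r["ts"], session_kills))
    return labels


def to_binary(level: int) -> int:
    """Collapse graded levels to erroneous (1) / non-erroneous (0)."""
    return int(level > 0)


kills = {("dev1", "s1"): [1000.0]}
readings = [
    {"device_id": "dev1", "session_id": "s1", "ts": 950.0},  # 50 s before the kill
    {"device_id": "dev1", "session_id": "s1", "ts": 800.0},  # 200 s before
    {"device_id": "dev1", "session_id": "s1", "ts": 600.0},  # 400 s before
    {"device_id": "dev1", "session_id": "s2", "ts": 990.0},  # different session
]
levels = label_readings(readings, kills)
print(levels, [to_binary(lv) for lv in levels])  # [4, 2, 0, 0] [1, 1, 0, 0]
```

Because kills are looked up per (device, session) pair, a kill in another session never labels a reading, which is the matching condition the labeling requires.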

Summary of OOM Prediction — Problem Formulation

The dataset now consists of many entries, each with certain runtime features (navigational level and memory reading in our case) and device characteristics (a mix of over 15 features that may be numerical, boolean or categorical). The output variable is the graded or ungraded classification variable, labeled as described in the section above, primarily based on how close the memory reading’s timestamp is to the OOM kill. We can now use any multi-class classification algorithm: ANNs, XGBoost, AdaBoost, ElasticNet with softmax, etc. This completes the formulation of OOM kill prediction for a device streaming Netflix as a machine learning problem.
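To make the formulation concrete, here is one way a joined, labeled row could be turned into a numeric feature vector that any multi-class classifier accepts: numeric features pass through, booleans become 0/1, and categoricals are one-hot encoded against a fixed vocabulary. The feature names and vocabularies are hypothetical; a library encoder (for example scikit-learn's `OneHotEncoder`) would normally do this job.

```python
from typing import Dict, List, Tuple

# Hypothetical feature spec: runtime features plus a few device characteristics.
NUMERIC = ["memory_mb", "buffer_size_mb"]
BOOLEAN = ["supports_hdr"]
CATEGORICAL: Dict[str, List[str]] = {
    "nav_level": ["home", "details", "playback"],
    "chipset_manufacturer": ["X", "Y"],
}


def encode(row: dict) -> List[float]:
    """Flatten one joined row into a fixed-length numeric vector."""
    vector = [float(row[name]) for name in NUMERIC]
    vector += [1.0 if row[name] else 0.0 for name in BOOLEAN]
    for name, vocabulary in CATEGORICAL.items():
        # One-hot block; an unseen category maps to all zeros.
        vector += [1.0 if row[name] == value else 0.0 for value in vocabulary]
    return vector


def build_dataset(rows: List[dict]) -> Tuple[List[List[float]], List[int]]:
    """Split labeled rows into (features, graded labels)."""
    X = [encode(row) for row in rows]
    y = [row["level"] for row in rows]
    return X, y


rows = [{"memory_mb": 420, "buffer_size_mb": 96, "supports_hdr": True,
         "nav_level": "playback", "chipset_manufacturer": "Y", "level": 4}]
X, y = build_dataset(rows)
print(X, y)  # [[420.0, 96.0, 1.0, 0.0, 0.0, 1.0, 0.0, 1.0]] [4]
```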

Data Analysis and Observations

Without diving deep into the actual devices and classification results, we now show some examples of how the structured data can be used for preliminary analysis and observations. We do so by simply looking at the peak of OOM kills in a distribution over the memory readings within 5 minutes prior to the kill.
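The distributional view used in this section can be reproduced with a simple histogram: keep only readings taken within 5 minutes before their session's kill, bin them by memory, and find the peak bin. The tuple layout and the 50 MB bin width are assumptions for illustration; computing this per device type yields the kind of per-device distribution compared in the next subsection.

```python
from collections import Counter
from typing import List, Tuple

WINDOW_S = 300  # 5 minutes before the kill, as in the text


def kill_window_histogram(samples: List[Tuple[float, float, float]], bin_mb: int = 50) -> Counter:
    """Histogram of memory readings taken within 5 minutes before an OOM kill.

    Each sample is (reading_ts, kill_ts, memory_mb) for a reading already
    matched to its session's next kill. `bin_mb` is a hypothetical bin width;
    keys are the lower edges of the memory bins.
    """
    hist: Counter = Counter()
    for reading_ts, kill_ts, memory_mb in samples:
        if 0 <= kill_ts - reading_ts <= WINDOW_S:
            hist[int(memory_mb // bin_mb) * bin_mb] += 1
    return hist


def peak_bin(hist: Counter) -> int:
    """Lower edge of the most populated memory bin."""
    return hist.most_common(1)[0][0]


samples = [(950, 1000, 410), (900, 1000, 430), (980, 1000, 445),
           (800, 1000, 120), (100, 1000, 400)]  # last one is outside the window
hist = kill_window_histogram(samples)
print(sorted(hist.items()), peak_bin(hist))  # [(100, 1), (400, 3)] 400
```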

Different device types

From the graph above, we see how, even without any modeling, the structured data can give us immense knowledge about the memory domain. For example, the early peaks (marked in red) are mostly crashes not visible to users that were erroneously marked as user-facing crashes. The peaks marked in green are real user-facing crashes. Device 2 is an example of a sharp peak towards the higher memory range, with a sharp decline and almost no entries after the peak ends. Hence, for Devices 1 and 2, the task of OOM prediction is relatively easy, after which we can start taking pre-emptive action to lower our memory usage. In the case of Device 3, we have a roughly Gaussian distribution, indicating that OOM kills occur across the whole memory range with no sharp decline.

Feature Engineering, Accuracy Measures and Future Work Directions

We leave the reader with some ideas for engineering more features and accuracy measures specific to memory usage in a device streaming environment.

- We could manually engineer memory features that exploit the time-series nature of the memory value when aggregated over a user’s session. Suggestions include a running mean of the last 3 values, or the difference between the current entry and a running exponential average. Analyzing how memory grows over the user’s session could indicate whether the kill was caused by in-app streaming demand or by external factors.

- Another feature could be the time spent in different navigational levels. Internally, the app caches pre-fetched data, images, descriptions, etc., and the time spent in a level could indicate whether or not those caches have been cleared.

- When deciding on accuracy measures for the problem, it is important to analyze the distinction between false positives and false negatives. The dataset (fortunately for Netflix!) will be highly biased: as an example, over 99.1% of entries are not kill-related. In general, false negatives (not predicting the kill when the app is actually killed) are more detrimental than false positives (predicting a kill even though the app would have survived). Because the kill happens rarely (0.9% in this example), even if we end up lowering memory and performance 2% of the time while catching almost all of the 0.9% OOM kills, we will have eliminated approximately all OOM kills at the cost of lowering performance/clearing the cache an extra 1.1% of the time (false positives).
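The two session-level features suggested above can be sketched as follows, assuming per-session memory readings arrive in time order and navigational-level changes are logged as (timestamp, level) transitions. The EMA smoothing factor `alpha` and the function names are hypothetical choices, not values from the original analysis.

```python
from collections import deque
from typing import Dict, List, Tuple


def rolling_features(memory: List[float], alpha: float = 0.3) -> List[Tuple[float, float]]:
    """Per reading: (mean of the last 3 values, value minus running EMA).

    The difference is taken before the EMA absorbs the current value, so it
    compares each reading with the history preceding it (0.0 for the first).
    """
    window: deque = deque(maxlen=3)
    ema = None
    features = []
    for value in memory:
        window.append(value)
        mean3 = sum(window) / len(window)
        diff = 0.0 if ema is None else value - ema
        ema = value if ema is None else alpha * value + (1 - alpha) * ema
        features.append((mean3, diff))
    return features


def time_in_levels(events: List[Tuple[float, str]], session_end: float) -> Dict[str, float]:
    """Total seconds spent in each navigational level.

    `events` are (timestamp, level) transitions sorted by time; the last
    level is assumed to last until `session_end`.
    """
    totals: Dict[str, float] = {}
    boundaries = events[1:] + [(session_end, "")]
    for (ts, level), (next_ts, _) in zip(events, boundaries):
        totals[level] = totals.get(level, 0.0) + (next_ts - ts)
    return totals


print(rolling_features([100.0, 200.0, 300.0, 400.0], alpha=0.5))
# [(100.0, 0.0), (150.0, 100.0), (200.0, 150.0), (300.0, 175.0)]
print(time_in_levels([(0, "home"), (30, "details"), (45, "home")], session_end=100))
# {'home': 85.0, 'details': 15.0}
```

A steadily positive EMA difference within a session points to in-app memory growth, which is the signal the first bullet suggests looking for.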

Note: The actual results and confusion matrices have been redacted for confidentiality purposes and to protect proprietary knowledge about our partner devices.
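The arithmetic in the accuracy-measures bullet can be checked with a small helper. The counts below are derived from that bullet's illustrative percentages (0.9% kills, 2% of readings flagged, every kill caught) over a hypothetical 100,000 readings; they are not real results. Recall-weighted F-beta (beta > 1) is one standard way to encode that false negatives cost more than false positives; it is offered here as a suggestion, not as the metric the authors used.

```python
def metrics(tp: int, fp: int, fn: int, tn: int, beta: float = 2.0) -> dict:
    """Precision, recall, false-positive share of all readings, and F-beta."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = tp + fp + fn + tn
    b2 = beta * beta
    denom = b2 * precision + recall
    f_beta = (1 + b2) * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall,
            "fp_share": fp / total, "f_beta": f_beta}


# 100,000 readings: 900 kill-related (0.9%), 2,000 flagged (2%), all kills caught.
m = metrics(tp=900, fp=1_100, fn=0, tn=98_000)
print({k: round(v, 3) for k, v in m.items()})
# {'precision': 0.45, 'recall': 1.0, 'fp_share': 0.011, 'f_beta': 0.804}
```

Precision looks poor at 0.45, yet recall is perfect and the extra pre-emptive actions touch only 1.1% of readings, which is exactly the tradeoff the bullet argues is acceptable.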

Summary

This post has focussed on shedding light on dataset curation and engineering when dealing with memory and low resource crashes for streaming services on devices. We also covered the distinction between non-changing (static) attributes and runtime attributes, and strategies to join them into one cohesive dataset for OOM kill prediction. We covered labeling strategies involving graded window-based approaches and explored some graphical analysis on the structured dataset. Finally, we ended with some future directions and possibilities for feature engineering and accuracy measurements in the memory context.

Stay tuned for further posts on memory management and the use of ML modeling to deal with systemic and low latency data collected at the device level. We will try to post results of our models on the dataset we have created soon.

Acknowledgements

I would like to thank the members of various teams — Partner Engineering (Mihir Daftari, Akshay Garg), TVUI team (Andrew Eichacker, Jason Munning), Streaming Data Team, Big Data Platform Team, Device Ecosystem Team and Data Science Engineering Team (Chris Pham), for all their support.

Formulating ‘Out of Memory Kill’ Prediction on the Netflix App as a Machine Learning Problem was originally published in Netflix TechBlog on Medium.